**This is a review post of Kenton O’Hara and others’ “Touchless Interaction in Surgery.” It contains both a summary and a critique/commentary.**

Summary

Kenton O’Hara and others’ “Touchless Interaction in Surgery” focuses on tools that give surgeons direct control over image manipulation without compromising sterility.

1. Introduction

In medicine, visual displays of imaging such as MRI and CT are essential because they support diagnosis and planning. They also provide a virtual “line of sight” into the body during surgery. The problem is that these displays are constrained by typical interaction mechanisms, such as the keyboard and mouse. In surgery, sterility is paramount. However, the typical interaction mechanisms are not risk-free because they rely on direct hands-on control: the surgeon has to touch the mouse and keyboard, sometimes through the gown, to manipulate the images. These unsterile objects can compromise the surgery. The authors therefore discuss how to give surgeons direct control over image manipulation and navigation while maintaining sterility during surgery. Although workarounds exist, such as barrier-based solutions or avoiding contact with input devices altogether, the authors note that these still involve certain risks.

2. Main Technologies of the System

The authors focus in particular on a tool that gives surgeons direct image manipulation without compromising sterility. The main technologies used to build this system are the Kinect sensor and its software development kit. The Kinect sensor reads the surgeon’s gestures, which lets the surgeon manipulate images without touching anything.

3. Socio-Technical Concerns

Several issues still need to be considered and worked on. These challenges range from the gesture vocabulary to the appropriate combination of input modalities and specific sensing mechanisms. There are also concerns about how the technology could affect the way surgeons practice. Since touchless interaction in medicine, especially in surgery, is at an early stage of development, more careful research, development, and evaluation are required. Touchless interaction could not only change the practice of surgery but also open opportunities to radically rethink the design and layout of operating theatres in the future.

Critique/Commentary

I believe that a tool giving surgeons direct image manipulation without compromising sterility will lead to great progress in the medical field. It is very interesting to see how people are trying to improve current conditions in medicine. I had never considered that traditional interaction mechanisms could be problematic in a medical setting.

If this new tool is fully developed, I am curious about the attitude that doctors and medical students will have toward it. Currently, I believe that doctors and medical students prepare precisely and meticulously for any surgery. However, if they start relying on virtual reality, I wonder whether they would still prepare as meticulously as they do now. I suspect that their preparation for surgery would become less meticulous and involve less effort.

**Source:**

- O’Hara, K., et al. (2014) “Touchless interaction in surgery,” Communications of the ACM, Volume 57, Issue 1, pp. 70-77.

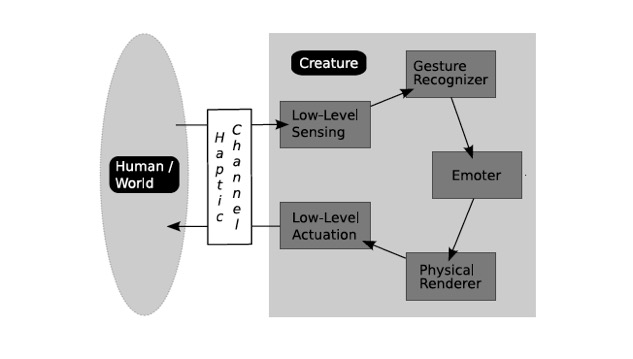

First, we isolate a specific cell. We study the variety of gestures a human uses in the display of affective touch (cell 1). Then, we examine the interaction across two cells, such as the output from the human and the ability of the creature to recognize it (cell 1 → 2). The goal here is to characterize low-level aspects of the interaction, then use these to construct higher-order models, eventually ending with an understanding of the entire interaction cycle.
